Enterprise AI Platforms Built by HPE, Lenovo, and Supermicro

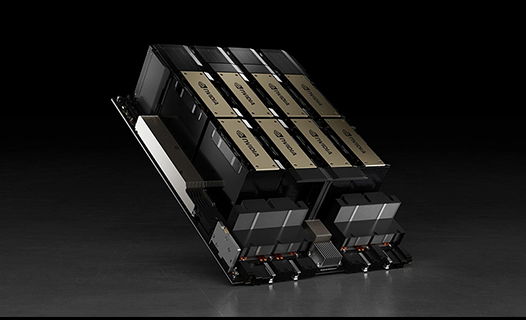

(NVIDIA HGX Systems)

The foundation for AI infrastructure: high-performance, GPU-accelerated computing platforms designed for the most demanding AI and HPC workloads.

NVIDIA HGX is the foundational GPU-accelerated platform that powers the world's leading AI systems. From the latest Blackwell architecture to proven Hopper designs, Enterprise AI Platforms combine cutting-edge GPUs, high-speed NVLink interconnects, and optimized system designs to deliver exceptional performance for AI training, inference, and high-performance computing workloads.

AIdeology offers the complete range of NVIDIA HGX-based systems through our partnerships with leading server manufacturers, providing organizations with flexible options to build their AI infrastructure at any scale.

HGX B200: latest-generation Enterprise AI Platform featuring eight B200 GPUs linked by 5th-generation NVLink switches.

144 PFLOPS FP4 compute capability

1.44 TB pooled HBM3e memory

5th-gen NVLink switches

HGX B300 NVL16: the most powerful Enterprise AI Platform, with sixteen Blackwell Ultra GPUs wired into a single NVLink-5 domain.

2.3 TB HBM3e on board

≈11× faster LLM inference vs HGX H100

1.8 TB/s NVLink interconnect bandwidth

HGX H200: enhanced Hopper architecture with significantly increased memory capacity for large language models and complex AI workloads.

1.1 TB aggregate memory capacity

~1.9× faster Llama-2 70B inference vs H100

Drop-in compatible with Hopper servers

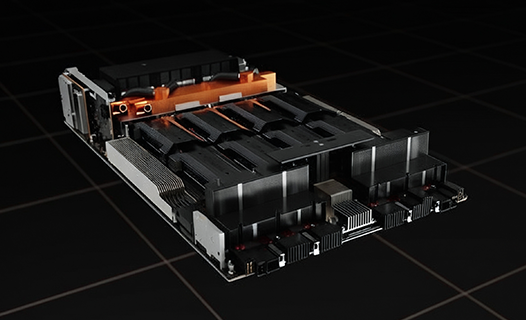

HGX H100: proven workhorse for AI training and inference with broad ecosystem support across all major OEM platforms.

640 GB HBM3 total capacity

Widely certified across every Tier-1 OEM

4th-generation NVLink across the board

Enterprise AI Platforms integrate into diverse server designs, allowing organizations to choose the optimal form factor and features for their specific AI workloads and infrastructure requirements.

Start with a single HGX system and scale to multiple nodes as your AI initiatives grow, maintaining consistent architecture and software compatibility across your infrastructure.

HGX systems are supported by NVIDIA's complete software ecosystem, including CUDA, cuDNN, TensorRT, and domain-specific libraries for optimal performance.

| Platform | Architecture | GPU Count | Total GPU Memory | Key Advantage |
|---|---|---|---|---|
| HGX B300 NVL16 | Blackwell Ultra | 16 | 2.3 TB HBM3e | ≈11× faster LLM inference vs HGX H100 |
| HGX B200 | Blackwell | 8 | 1.44 TB HBM3e | 144 PFLOPS FP4 compute |
| HGX H200 | Enhanced Hopper | 8 | 1.1 TB HBM3e | ~1.9× faster Llama-2 70B inference vs H100 |
| HGX H100 | Hopper | 4/8 | 640 GB HBM3 (8-GPU configuration) | Proven workhorse, broad ecosystem |
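As a rough planning aid, the totals in the table can be turned into per-GPU figures and a first-pass check of whether a model's weights fit in a system's pooled memory. The sketch below is illustrative only: the function names and the 20% headroom factor are assumptions, not vendor guidance, and the 1.1 TB Hopper-based row is keyed as `HGX H200` on the assumption that it refers to that platform. It uses the rounded totals from this page and the 8-GPU H100 configuration, so results are approximate.

```python
# Rounded totals as listed on this page, in decimal GB (1 TB = 1000 GB).
# The "HGX H200" key for the 1.1 TB row is an assumption (see text above).
PLATFORMS = {
    "HGX B300 NVL16": {"gpus": 16, "total_gb": 2300},
    "HGX B200": {"gpus": 8, "total_gb": 1440},
    "HGX H200": {"gpus": 8, "total_gb": 1100},
    "HGX H100": {"gpus": 8, "total_gb": 640},  # 8-GPU configuration
}


def per_gpu_memory_gb(platform: str) -> float:
    """Approximate memory per GPU: pooled total divided by GPU count."""
    spec = PLATFORMS[platform]
    return spec["total_gb"] / spec["gpus"]


def weights_fit(params_billion: float, bytes_per_param: float,
                platform: str, headroom: float = 1.2) -> bool:
    """First-pass check that model weights fit in pooled GPU memory.

    1 billion parameters at 1 byte each is about 1 GB, so the weight
    footprint in GB is params_billion * bytes_per_param. `headroom` is a
    hypothetical safety factor for activations and caches; tune it for
    the actual workload.
    """
    weights_gb = params_billion * bytes_per_param
    return weights_gb * headroom <= PLATFORMS[platform]["total_gb"]


if __name__ == "__main__":
    for name in PLATFORMS:
        print(f"{name}: ~{per_gpu_memory_gb(name):.1f} GB per GPU")
    # A 70B-parameter model at 2 bytes per parameter (FP16/BF16).
    print("70B FP16 fits on HGX H100:", weights_fit(70, 2, "HGX H100"))
```

This only estimates capacity for weights; real deployments also depend on batch size, sequence length, and the parallelism strategy used across the NVLink domain.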

Contact our team to discuss your AI computing requirements and learn how NVIDIA HGX systems built by HPE, Lenovo, and Supermicro can provide the foundation for your AI infrastructure, from single nodes to large-scale deployments.