NVIDIA DGX Systems

The world's most advanced AI supercomputing platform, engineered to accelerate breakthrough discoveries and transform industries through the power of artificial intelligence.

Modular, scalable AI infrastructure building blocks that provide a validated reference architecture for deploying DGX systems with integrated networking, storage, and management software.

Pre-validated hardware and software stack

Scalable from small clusters to enterprise deployments

Integrated high-speed networking (InfiniBand/Ethernet)

Optimized for AI training and inference workloads

Enhanced Hopper architecture with significantly increased memory capacity for large language models and complex AI workloads.

Up to thousands of DGX systems in a unified architecture

Exascale AI computing performance

Turnkey deployment and management

Proven reference architecture

Purpose-built for AI with optimized hardware, software, and networking to deliver maximum performance for training and inference workloads.

Enterprise-grade reliability, security, and support, with a comprehensive software stack including NVIDIA AI Enterprise and management tools.

Trusted by leading AI researchers and enterprises worldwide for breakthrough discoveries and production AI deployments at scale.

AIdeology delivers the complete spectrum of NVIDIA DGX systems, from cutting-edge Blackwell architecture to proven Hopper-based platforms, ensuring your organization has access to the most powerful AI infrastructure available.

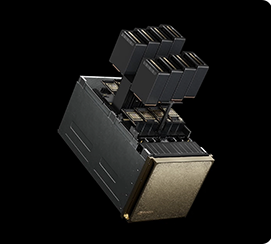

Next-generation Blackwell-based system delivering unprecedented AI performance and efficiency.

Eight B200 GPUs with 1440GB HBM3e

14.4TB/s GPU-to-GPU bandwidth

2.5× performance improvement

Enhanced Hopper-based system with upgraded H200 GPUs for significantly increased memory capacity.

Eight H200 GPUs with 1128GB HBM3e

7.2TB/s GPU-to-GPU bandwidth

1.5× more bandwidth than previous generation

Revolutionary Grace Blackwell system combining CPU and GPU in a unified architecture.

72 B200 GPUs with 13.5TB HBM3e

36 integrated Grace CPUs

1.3 exaFLOPS AI performance

Grace Hopper superchip combining an Arm-based Grace CPU with an H100 GPU in a unified memory architecture.

Grace Hopper superchip architecture

144GB unified memory

10× faster for large model inference

Our certified AI infrastructure specialists work with you to design the optimal DGX configuration for your specific workloads, performance requirements, and growth plans.

From site preparation to system commissioning, our experienced engineers ensure your DGX systems are properly installed, configured, and optimized for maximum performance and reliability.

Our support extends beyond deployment with ongoing system monitoring, performance optimization, software updates, and 24/7 technical assistance to keep your AI infrastructure running at peak efficiency.

Whether you need a single DGX system for your research team or a complete SuperPOD for enterprise-scale AI, AIdeology has the expertise and partnerships to deliver the perfect solution for your organization.